Introduction

In the last article, I looked at using GitHub Actions and Conventional Commits to package and release APIs on GitHub that follow semantic versioning.

I posted the GitHub Action YAML file that had one job: "release".

Although this is a useful action, it's one of the last steps, if not the last, in a continuous integration and continuous deployment pipeline. Other steps that come before release are equally important. The first step in the deployment process, building and unit testing, occurs after a developer pushes the code to the repository.

This article discusses another GitHub Action that installs dependencies and runs unit tests when developers create pull requests. Pull requests let maintainers review, test, and decide if code should become part of the Git repository. Fortunately, we can save ourselves time when reviewing code if we run static analysis and unit tests before our eyes even meet the changes.

The script and topics discussed are not universal. The project uses Python and the FastAPI framework, so the code and libraries will reflect those technologies.

Unit Tests with FastAPI

FastAPI's documentation has a section on unit testing and advanced guides to override a database connection.

It's a great introduction, but you'll also need experience with pytest to improve test performance and efficiency.

Similar to the documentation, I have a basic index route in my main.py file.

# main.py
from fastapi import FastAPI

app = FastAPI()


@app.get("/", tags=["root"])
async def read_root() -> dict:
    return {"message": "Welcome to your todo list."}

Therefore, I can create a file next to main.py, named test_main.py, and add code to test the return value.

# test_main.py
from fastapi.testclient import TestClient

from main import app

client = TestClient(app)


def test_read_main():
    response = client.get("/")
    assert response.status_code == 200
    message = response.json()["message"]
    assert len(message) < 40

The TestClient calls our FastAPI app, and then we can run assert statements on the result. Let's use this single test to make sure that we set up the GitHub Action correctly. Later, I'll come back and show a more advanced use case that uses a pytest fixture for authentication.

Continuous Integration for FastAPI

To clarify, this setup requires that the project is hosted on GitHub because we're using a GitHub Action. If you followed the last article, then you know where to put the GitHub Action YAML file in your project.

The action that I am about to describe executes when a pull request (PR) is opened.

name: Pull Request

on:
  pull_request:
    branches:
      - master

jobs:
  test:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: [3.9]
    steps:
      - uses: actions/checkout@v2
      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v2
        with:
          python-version: ${{ matrix.python-version }}
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install flake8
          if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
      - name: Static Code Linting with flake8
        run: |
          # stop the build if there are Python syntax errors or undefined names
          flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
          # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide
          flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics
      - name: Unit Testing with pytest
        env:
          # add environment variables for tests
        run: |
          pytest

Let's walk through the script:

- The action is given the name Pull Request

- It runs when a PR that targets the master branch is opened or updated

- There is one job for the action: test

- The script runs on ubuntu-latest and uses Python version 3.9

- The action gets the code from the PR

- It sets up Python and installs the dependencies specified in the requirements.txt file (be sure to develop your app with a virtual environment enabled)

- Then, it uses flake8 to perform static linting (catching things like undefined variables, etc.)

- If the dependencies install and static linting passes, it runs the pytest command, consequently running the unit tests we define.

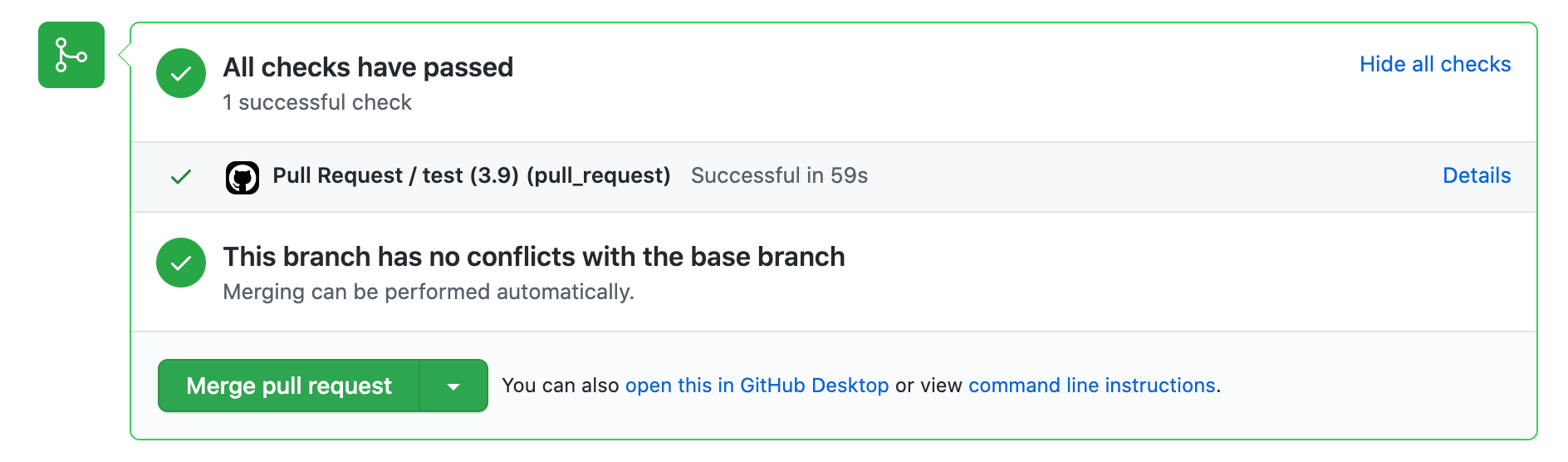

A green check will appear on the PR page, so reviewers know it's ready for review when the action runs successfully. If it doesn't run successfully, the creator needs to push new commits that address the failed tests.

Any push to the PR branch triggers the action.
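To catch failures before pushing, the same checks can be run locally. The following is only a sketch, assuming flake8 and pytest are installed in the active virtual environment; the run_checks helper and the CHECKS list are hypothetical names introduced for illustration, and the commands mirror the flake8 and pytest steps of the workflow.

```python
import subprocess
import sys

# The same commands the workflow runs, invoked through the current interpreter
# so they use the active virtual environment (an assumption of this sketch).
CHECKS = [
    [sys.executable, "-m", "flake8", ".", "--count",
     "--select=E9,F63,F7,F82", "--show-source", "--statistics"],
    [sys.executable, "-m", "flake8", ".", "--count", "--exit-zero",
     "--max-complexity=10", "--max-line-length=127", "--statistics"],
    [sys.executable, "-m", "pytest"],
]


def run_checks(checks=CHECKS, runner=subprocess.run):
    """Run each command in order and stop at the first failure.

    Returns 0 when every command succeeds, otherwise the exit code of the
    first command that failed (similar to how the CI job stops on a failed step).
    """
    for command in checks:
        result = runner(command)
        if result.returncode != 0:
            return result.returncode
    return 0
```

Calling sys.exit(run_checks()) from a small script in the project root would then report the same pass or fail result that the PR check reports.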

Try It Out

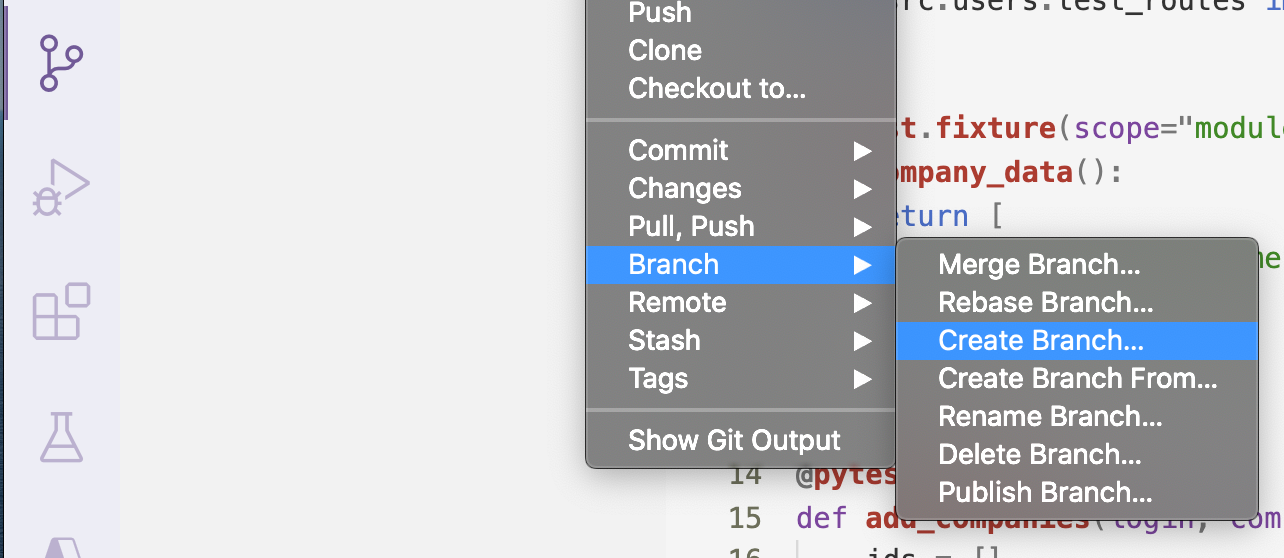

First, let's create a new branch. I'm going to do this in VSCode.

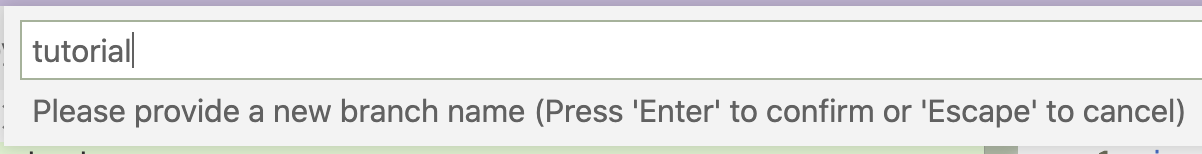

Name the branch whatever you would like.

Then, return a string for the index route that is longer than 40 characters.

# main.py
from fastapi import FastAPI

app = FastAPI()


@app.get("/", tags=["root"])
async def read_root() -> dict:
    return {"message": "Welcome to your todo list. Using this app will make you so productive you're going to ask other people if you can do their todos!"}

Save the file.

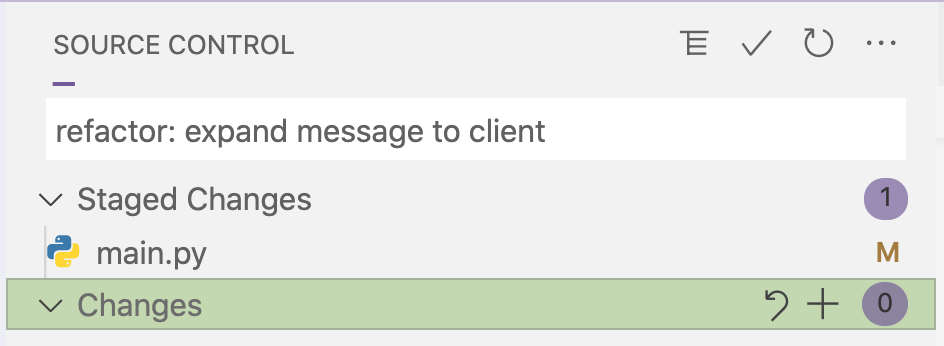

Commit the change (using conventional commit syntax).

refactor: expand message to client

Push the changes to the repository on the command line.

git push --set-upstream origin tutorial

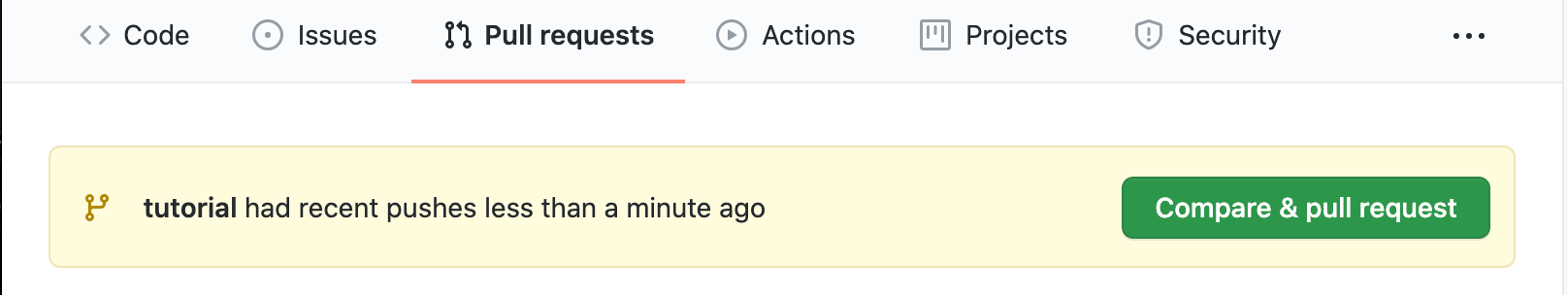

Next, open up a new pull request.

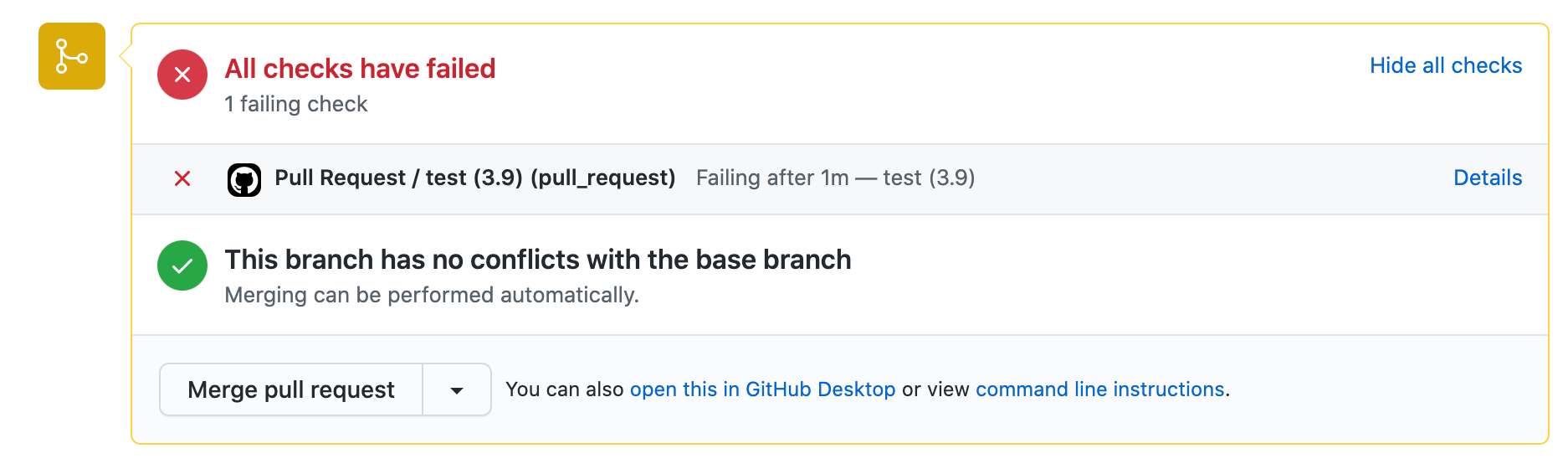

The action runs and fails.

Fair enough, let's go back into the code and shorten the message.

# main.py
from fastapi import FastAPI

app = FastAPI()


@app.get("/", tags=["root"])
async def read_root() -> dict:
    return {"message": "Welcome to your todo list. Use it!"}

Again, save the file, commit the change, and push upstream.

Perfect! This code is integrated and ready for review/merge.

Extra: Unit Testing in FastAPI

We walk a fine line between mixing our unit and functional tests when using the TestClient in FastAPI. It can be tempting to string requests or tests together. However, we need to keep our unit tests tight and follow the steps outlined in pytest's documentation.

- Arrange

- Act

- Assert

- Cleanup
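As a rough illustration of those four phases, here is a minimal sketch that uses only the standard library. The save_todo function and the todos.json file are hypothetical stand-ins for the code under test; they are not part of the project above.

```python
import json
import tempfile
from pathlib import Path


def save_todo(path: Path, title: str) -> dict:
    """Hypothetical function under test: append a todo to a JSON file."""
    todos = json.loads(path.read_text()) if path.exists() else []
    todo = {"id": len(todos) + 1, "title": title}
    todos.append(todo)
    path.write_text(json.dumps(todos))
    return todo


def test_save_todo_assigns_next_id():
    # Arrange: create an isolated file that already holds one todo.
    tmp_dir = tempfile.TemporaryDirectory()
    path = Path(tmp_dir.name) / "todos.json"
    path.write_text(json.dumps([{"id": 1, "title": "existing"}]))
    try:
        # Act: perform the single behavior this test is about.
        todo = save_todo(path, "write tests")

        # Assert: check the result of that one action.
        assert todo == {"id": 2, "title": "write tests"}
        assert len(json.loads(path.read_text())) == 2
    finally:
        # Cleanup: remove the temporary data even if an assertion failed.
        tmp_dir.cleanup()
```

Each test performs one action and checks its result, which keeps a failure easy to trace back to a single behavior.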

Testing over a network is also time-consuming, so we can use pytest fixtures in two ways:

- Separate tests and data

- Save resources when the opportunity is available

Let's take a look at an example of a test that requires a few network calls. Additionally, let's consider the scenario that our app has authentication or authorization protocols.

The test functions start with test_ and the fixtures have a pytest decorator above them.

First, examine the add_companies and company_data fixtures. These two fixtures have different scopes.

# companies/test_companies.py
import pytest

from test_main import client
from users.test_users import login


@pytest.fixture(scope="module")
def company_data():
    return [
        {"id": "jrts", "name": "JRTS", "keywords": ["nostrud ut", "do adipisicing"]},
        {"id": "test inc.", "name": "Test Inc.", "keywords": []},
    ]


@pytest.fixture
def add_companies(login, company_data):
    ids = []
    for company in company_data:
        res = client.put(
            "/company",
            headers={"Authorization": "Bearer " + login["token"]},
            json=company,
        )
        ids.append(company["id"])
    yield ids
    for item in ids:
        client.delete(
            "/company/{item}".format(item=item),
            headers={"Authorization": "Bearer " + login["token"]},
        )


def test_fetch_company(login, add_companies):
    """Fetch single company document"""
    id_of_any_company_added_in_fixture = add_companies[0]
    response = client.get(
        "/company/{id}".format(id=id_of_any_company_added_in_fixture),
        headers={"Authorization": "Bearer {token}".format(token=login["token"])},
    )
    assert response.status_code == 200
    assert "id" in response.json()
    assert response.json()["id"] == id_of_any_company_added_in_fixture


def test_update_company(login, add_companies):
    """Update single company document"""
    id_of_any_company_added_in_fixture = add_companies[0]
    any_list_of_strings = ["this", "was", "updated"]
    response = client.put(
        "/company/{id}".format(id=id_of_any_company_added_in_fixture),
        headers={"Authorization": "Bearer {token}".format(token=login["token"])},
        json={"id": "jrts", "name": "JRTS", "keywords": any_list_of_strings},
    )
    assert response.status_code == 202
    assert "keywords" in response.json()
    assert response.json()["keywords"][0] == any_list_of_strings[0]


# ... other tests

The company_data fixture uses the module scope, so it's not destroyed until after the last test in the file.

The add_companies fixture doesn't specify a scope, so it uses the default function scope and is destroyed after each test. It must be function-scoped so that each test has fresh data (arrange) before acting on the company document in the database (act).

Yield

The yield keyword is what lets a single pytest fixture handle both setup and teardown. Everything before yield runs when the fixture is set up, and the rest of the function waits until it's time to destroy the fixture.

For example, the second part of add_companies, where we delete the fake company data from the database, executes after each test finishes, whether it passes or fails. This keeps test data from endlessly duplicating (cleanup).
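The mechanics can be mimicked with a plain Python generator. This is a simplified sketch of the idea, not pytest's actual implementation: company_records and run_one_test are hypothetical names, and run_one_test stands in for the work a test runner does around a single test.

```python
def company_records(log):
    """Generator written the way a yield fixture is written."""
    log.append("setup: insert records")
    yield ["jrts", "test inc."]
    log.append("teardown: delete records")


def run_one_test(fixture_function, test_function):
    """Simplified stand-in for what a test runner does around one test."""
    log = []
    generator = fixture_function(log)
    value = next(generator)  # run the fixture up to its yield
    try:
        test_function(value)
        log.append("test: passed")
    except AssertionError:
        log.append("test: failed")
    finally:
        next(generator, None)  # resume after the yield to run the teardown
    return log


def passing_test(ids):
    assert ids[0] == "jrts"


def failing_test(ids):
    assert ids[0] == "not there"
```

With either passing_test or failing_test, the log returned by run_one_test ends with the teardown entry, which is the property the add_companies fixture relies on.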

Authentication Fixture

The longest-lasting scope for a fixture is the session scope. It's destroyed at the end of the test session.

What this means is that we can get the credentials to authenticate to the API once successfully and subsequently pass those credentials to all tests in the session. This saves us countless API calls to log in and gain credentials.

# users/test_users.py
import os

import pytest

from test_main import client


@pytest.fixture(scope="session")
def login():
    # Log in once per test session; credentials come from environment variables.
    response = client.post(
        "/user/token",
        files={
            "username": (None, os.environ["TEST_USER_NAME"]),
            "password": (None, os.environ["TEST_USER_PASSWORD"]),
        },
    )
    res = response.json()
    cookie = response.cookies.get("invoice_processing")
    token = res["access_token"]
    yield dict(cookie=cookie, token=token)
    # Runs at the end of the session: log the test user out.
    logout_response = client.get(
        "/user/logout", cookies=dict(invoice_processing=cookie)
    )

Combining these fixtures, and their various scopes, allows us to follow good practices with our tests and keep our test suites efficient.

Conclusion

Having a reliable suite of unit tests can make our development workflow more enjoyable. In addition, combining those tests into an automatic CI/CD pipeline can make our development and deployment workflows more efficient (and enjoyable).

This article was not a comprehensive look at best practices when testing or building a CI/CD pipeline, but I hope it was a good place for you to start!